Avatar is a wonder of modern technology. We've all heard about it, some of us have seen it, and we all agree that James Cameron worked bloody hard to make this movie the most advanced visual experience to grace the silver screen since the first frame of superficial animation. In ten years, Cameron has reinvented the idea of 3D cinema, the art of computer graphics, and the role of the real in the unreal. He spearheaded the development of audio/visual technology in ways nobody had previously imagined, and he had the cojones to spend more money on a movie than anybody else in history. Though it is entertainment, it is also a ballsy display of modern innovation and technological dominance that sets the bar higher for everyone else.

This is horribly ironic, given that it is the most anti-technology, environmentalist, tree-hugging, machines-are-evil, meditative-nature-religion, save-the-rainforest movie anyone could possibly dream of. It's funny that the movie that best exhibits the limitless power of human innovation is its own worst enemy. I mean, look at the numbers:

- Rendering the movie: 8 gigabytes/sec, 24 hours a day, over a period of months.

- Storing the movie: Each minute of film comes to around 18 GB.

- Processing Power: A feat of parallel processing, 34 racks of 32 quad-core processors each (34 x 32 x 4 = 4352 cores!). All running at 8 GB/sec for 24 hours/day.
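If you want to check the arithmetic behind those numbers, here is a quick back-of-the-envelope sketch. It only uses the figures quoted above, with one exception: the runtime of roughly 162 minutes is my own assumption, not something from the list, and the function names are made up for illustration. It also treats 1 TB as 1000 GB, so take the totals as rough.

```python
# Figures quoted in the list above.
RACKS = 34
PROCESSORS_PER_RACK = 32
CORES_PER_PROCESSOR = 4          # "quad-core"
RENDER_RATE_GB_PER_SEC = 8
STORAGE_GB_PER_MINUTE = 18

# Assumption, not from the list: a runtime of roughly 162 minutes.
ASSUMED_RUNTIME_MINUTES = 162

SECONDS_PER_DAY = 24 * 60 * 60


def total_cores():
    """Cores across all racks: racks x processors per rack x cores per processor."""
    return RACKS * PROCESSORS_PER_RACK * CORES_PER_PROCESSOR


def render_data_per_day_tb():
    """Data moved in one day at the quoted rate, in terabytes (1 TB = 1000 GB)."""
    return RENDER_RATE_GB_PER_SEC * SECONDS_PER_DAY / 1000


def finished_film_storage_tb(runtime_minutes=ASSUMED_RUNTIME_MINUTES):
    """Storage for the film at the quoted GB per minute, in terabytes."""
    return STORAGE_GB_PER_MINUTE * runtime_minutes / 1000


if __name__ == "__main__":
    print("Total cores:", total_cores())
    print("Render data per day (TB):", render_data_per_day_tb())
    print("Finished film storage (TB):", finished_film_storage_tb())
```

Under those assumptions, a single day at 8 GB/sec moves several hundred terabytes, while the finished film itself fits in a few terabytes. The heavy lifting is in the rendering, not the storing.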

That's pretty intense for a movie with an environmentalist message, don't you think?

Then I read a New York Times Op-Ed by Neal Stephenson. Here's the link. It talks about Star Wars and the subtle war between geeking out and vegging out. It's a very interesting article, but it poses a very bleak scenario for the technologists of the world (he even uses that word! Yes!). He writes that the Jedi Knights were the geeks of the Star Wars universe. They were the ones that everyone depended on to make things work and to know all the arcane knowledge there is to know. They were the ones called in to solve the hard problems, and they were well-versed in many aspects of technology, from flying warships to building lightsabers. If you read a lot of the back stories of Star Wars, the various novels and offshoots of the main cinema canon, you realize that the Jedi were hated by most simply because they were very good at what they did, they were masters of their world, and they were necessary for the world to function properly. This resentment is perfectly reflected in the writing of the three most recent films (Episodes I, II, and III). The Jedi way is attributed more and more to meditation and "trusting your feelings" and less and less to the study of warfare and calculus. Stephenson explains this as the writers giving the viewers what they want to see. Viewers want to see the abilities and powers of the Jedi as a product of meditation, something that anybody can do, as opposed to a product of years of training and study.

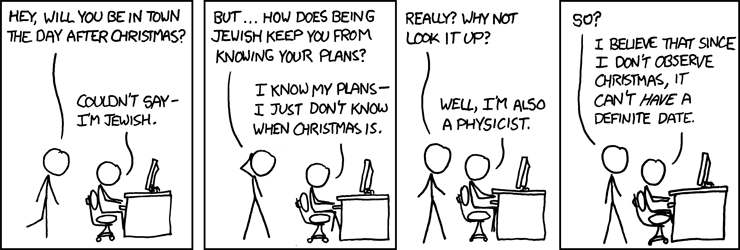

It's really nice to see on the screen that just by "trusting your feelings" you can deftly pilot a dog-fighting warship into the laser-riddled hellstorm at the beginning of Episode III and come out victorious, or that winning a pod race is as simple as flipping some switches and "trusting your instincts".

In reality, technology is hard. Too many "geeks" of the world believe that playing World of Warcraft in weeklong marathons makes you a master of technology. They are falling into the cultural trap that says you don't have to try to be who you want to be. You just have to look and talk like that person and you can become that. You can now be part of the high-tech community simply by using certain Internet memes a lot and by knowing certain movie scripts by heart. There's a reason why the science nerds are all becoming Asian. It's because Americans have stopped caring about the effort it takes to run our world and control its minutiae. Students are flocking to liberal arts majors instead of taking the more challenging route and excelling in something important. Biology is giving way to psychology, physics to journalism, and Computer Science to MIS (I'm guilty of that one). We want all the perks of intellectual dominance, but we are lulled into the dream that simply acting intelligent is a valid substitute for being intelligent.

So, James Cameron bothers me even more now. Seen in this light, the Na'vi in the movie are naturally gifted masters of their environment, able to interface with many complicated aspects of animal and plant life via a "wire" of sorts that can attach to all kinds of things in the wild. For example, banshee riding is made simple through a natural neural connection between banshee and rider that allows the rider to control the animal with thought alone. No training required. They are masters, but they never have to try. They just need to "be one with nature" and all of a sudden they are the masters of their world. The humans, on the other hand, have obviously made great leaps and bounds in technology to do what they do on the planet of Pandora. We see snippets of it in the form of the Avatar technology and the military prowess, but we can only imagine what Earth looks like at this point in history. They are the villains in this story. Avatar shows us that progress only leads to destruction and genocide. Only by communing with nature, and by "being one with the wild", can we ever hope to achieve any kind of moral civilization.

Audiences will eat this up. The techies, the ones responsible for running the stuff that keeps us alive, they're the evil ones. The guys that train for years, the ones that are steeped in knowledge and experience, the ones that apply what they have spent years learning to everyday life, the people who try to make progress, they're the evil ones. If only we didn't have to depend on those people to survive; if we could be more like the Na'vi, not having to work for mastery, then we would do no evil.

Ironic, coming from the guy in Hollywood who is arguably the most steeped in cutting-edge technology and money. I'm not sure what the message is supposed to be regarding all this, but I found the irony... ironic.